Claudia Pérez D’Arpino, MIT

4/11/18

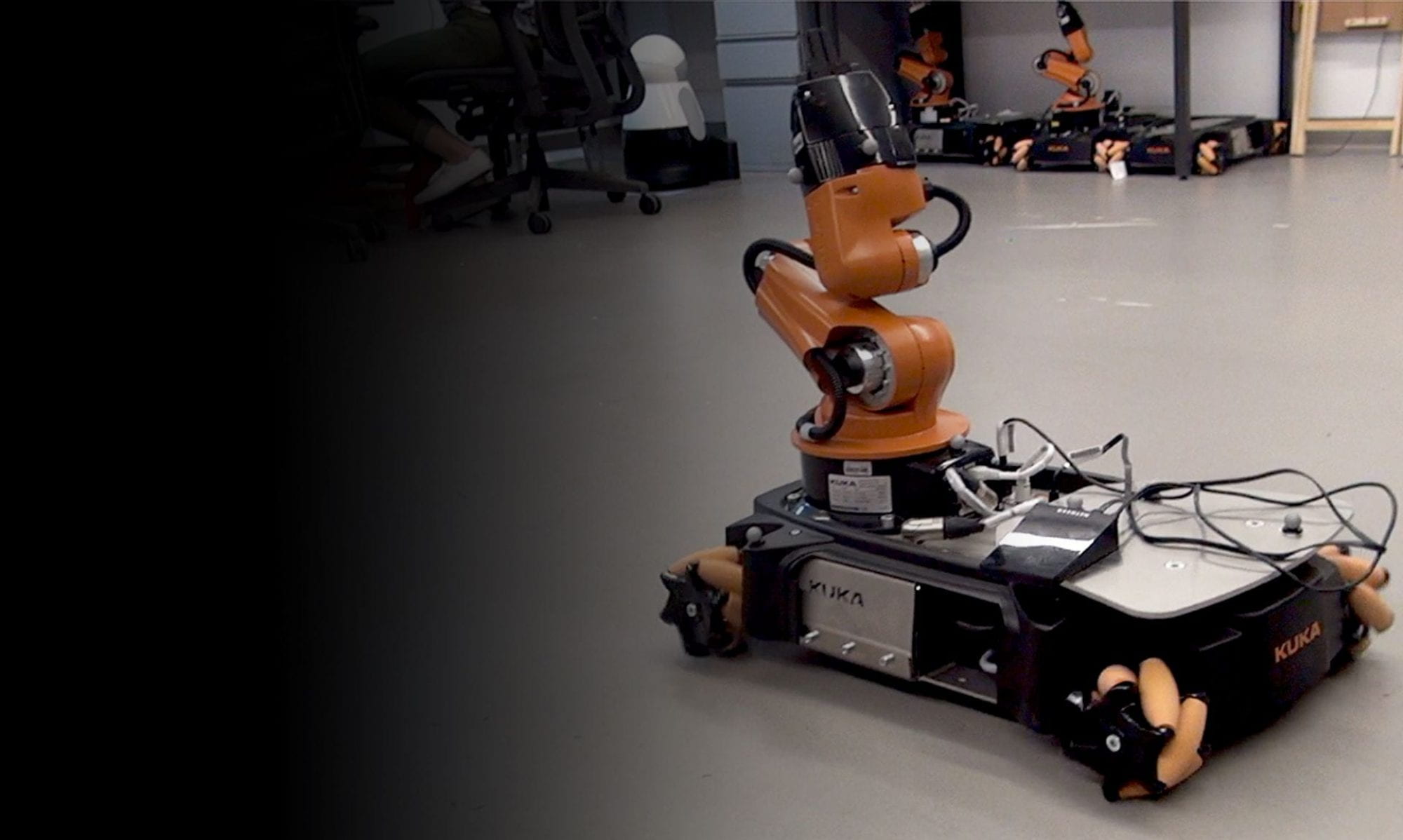

Abstract: The use of robots for complex manipulation tasks is currently challenged by the limited ability of robots to construct a rich representation of the activity at both the motion and task levels in ways that are both functional and apt for human-supervised execution. For instance, the operator of a remote robot would benefit from planning assistance, as opposed to the currently used method of joint-by-joint direct teleoperation. In manufacturing, robots are increasingly expected to execute manipulation tasks in workspaces shared with humans, which requires the robot to predict human actions and plan around these predictions. In both cases, it is beneficial to deploy systems that are capable of learning skills from observed demonstrations, as this would enable the application of robotics by users without programming skills. However, previous work on learning from demonstrations is limited in the range of tasks that can be learned and generalized across different skills and different robots. In this talk, I present C-LEARN, a method of learning from demonstrations that supports the use of hard geometric constraints for planning multi-step functional manipulation tasks with multiple end effectors in quasi-static settings, and show the advantages of using the method in a shared autonomy framework.

Speaker Bio: Claudia Pérez D’Arpino is a PhD Candidate in the Electrical Engineering and Computer Science Department at the Massachusetts Institute of Technology, advised by Prof. Julie A. Shah in the Interactive Robotics Group since 2012. She received her degree in Electronics Engineering (2008) and her Master's in Mechatronics (2010) from the Simon Bolivar University in Caracas, Venezuela, where she served as Assistant Professor in the Electronics and Circuits Department (2010-2012) with a focus on Robotics. She participated in the DARPA Robotics Challenge with Team MIT (2012-2015). Her research at CSAIL combines machine learning and planning techniques to empower humans through the use of robotics and AI. Her PhD research centers on enabling robots to learn and create strategies for multi-step manipulation tasks by observing demonstrations, and on developing efficient methods for robots to employ these skills in collaboration with humans, either for shared workspace collaboration, such as assembly in manufacturing, or for remote robot control in shared autonomy, such as emergency response scenarios.